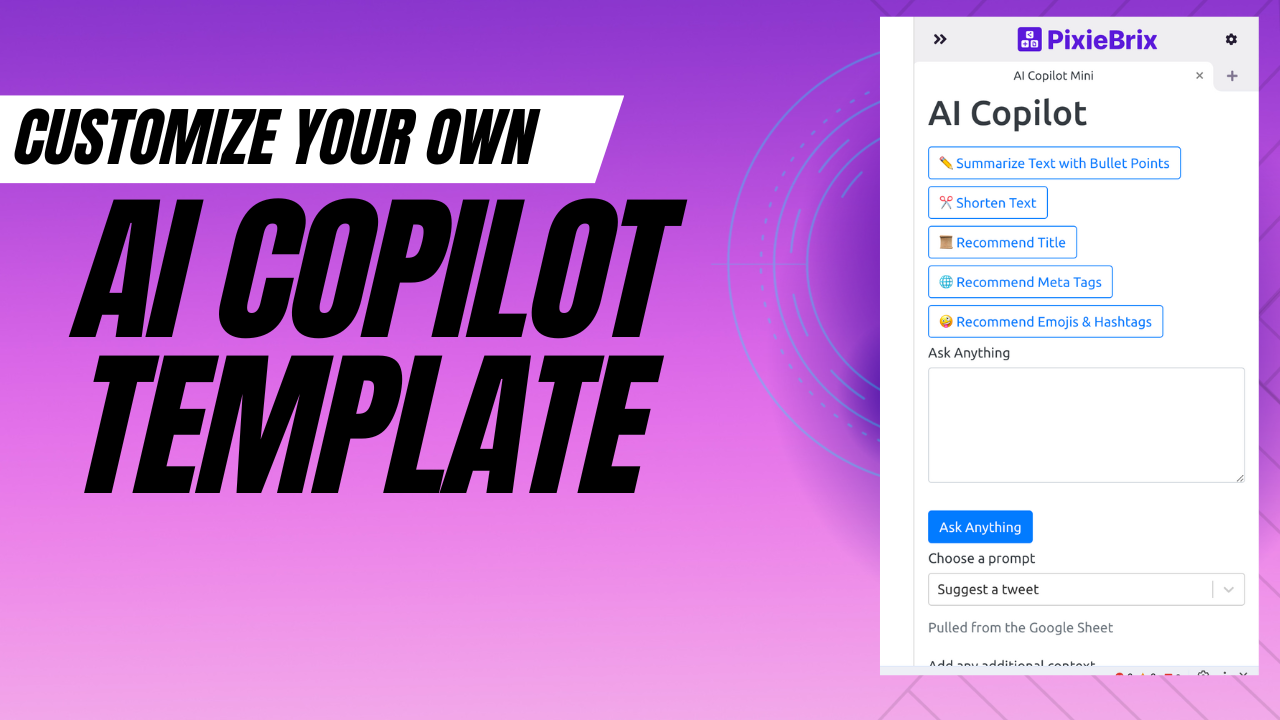

Customize AI Copilot

Build your own AI Copilot to run on any webpage you need. Access your favorite ChatGPT prompts, or create a detailed system prompt to perform specific tasks.

If you want to create your own AI Copilot, you can customize our template to fit your specific needs.

Here are a few ways to customize the PixieBrix AI Copilot mod template:

- Make a copy of the AI Copilot Mod Template

- Connect your own source data

- Customize the system prompt

- Use another LLM besides ChatGPT

- Increase the number of responses that are returned

📋 Make a Copy of the AI Copilot Mod Template

🏁 Before you can customize anything, you'll need a copy of the template in an editable format.

💽️ Connect your own source data

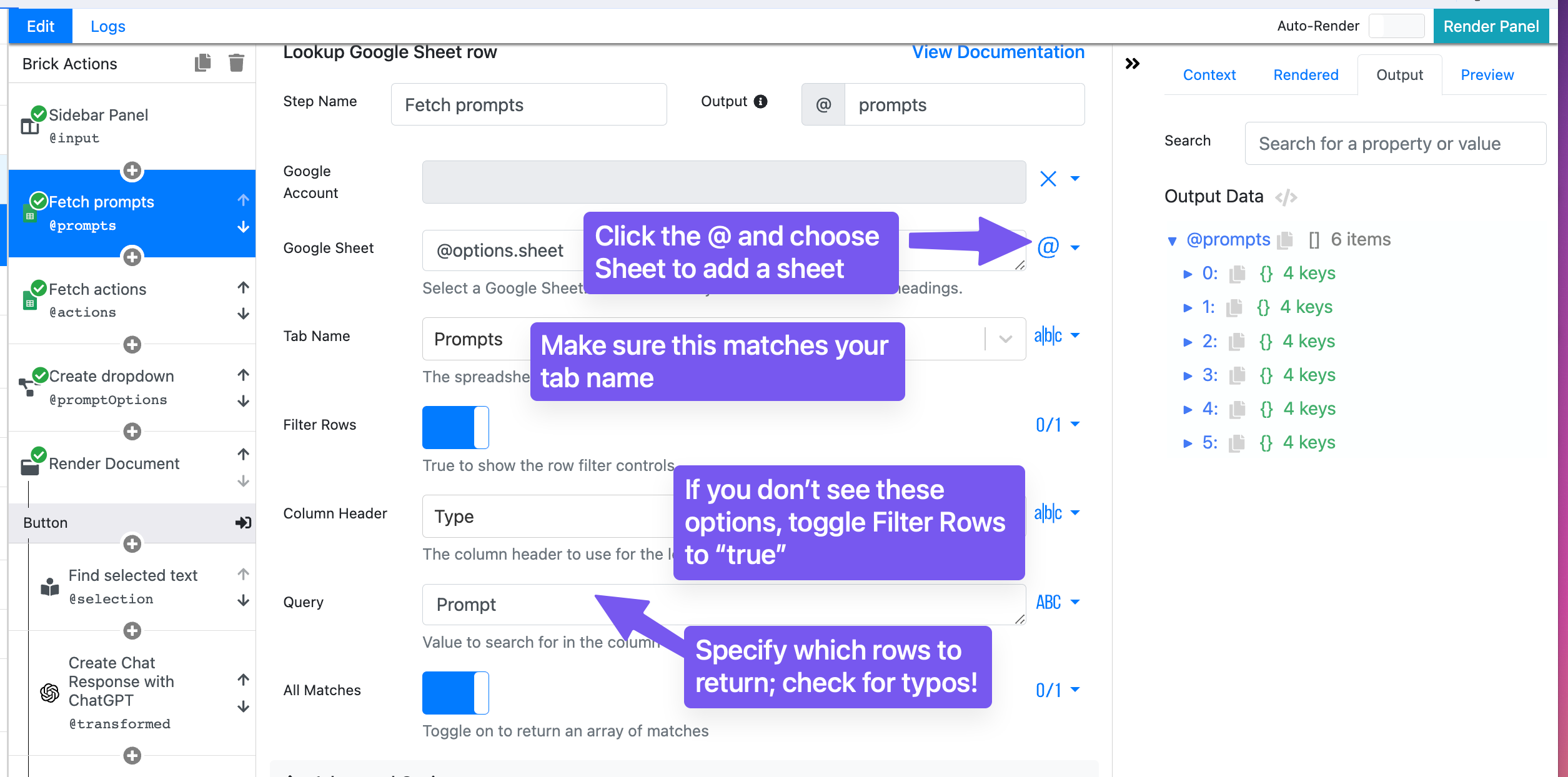

One of the first things you may want to customize is where your prompts come from. In the mod template, the prompts are defined in a Google Sheet, and we use the Lookup Google Sheet row brick (renamed Fetch prompts) to query it.

You can see how it works in the screenshot above.

You might need to modify the query if your Google Sheet is set up differently from our template. Click the Fetch prompts brick and check out the Brick Configuration Panel in the middle of the Page Editor.

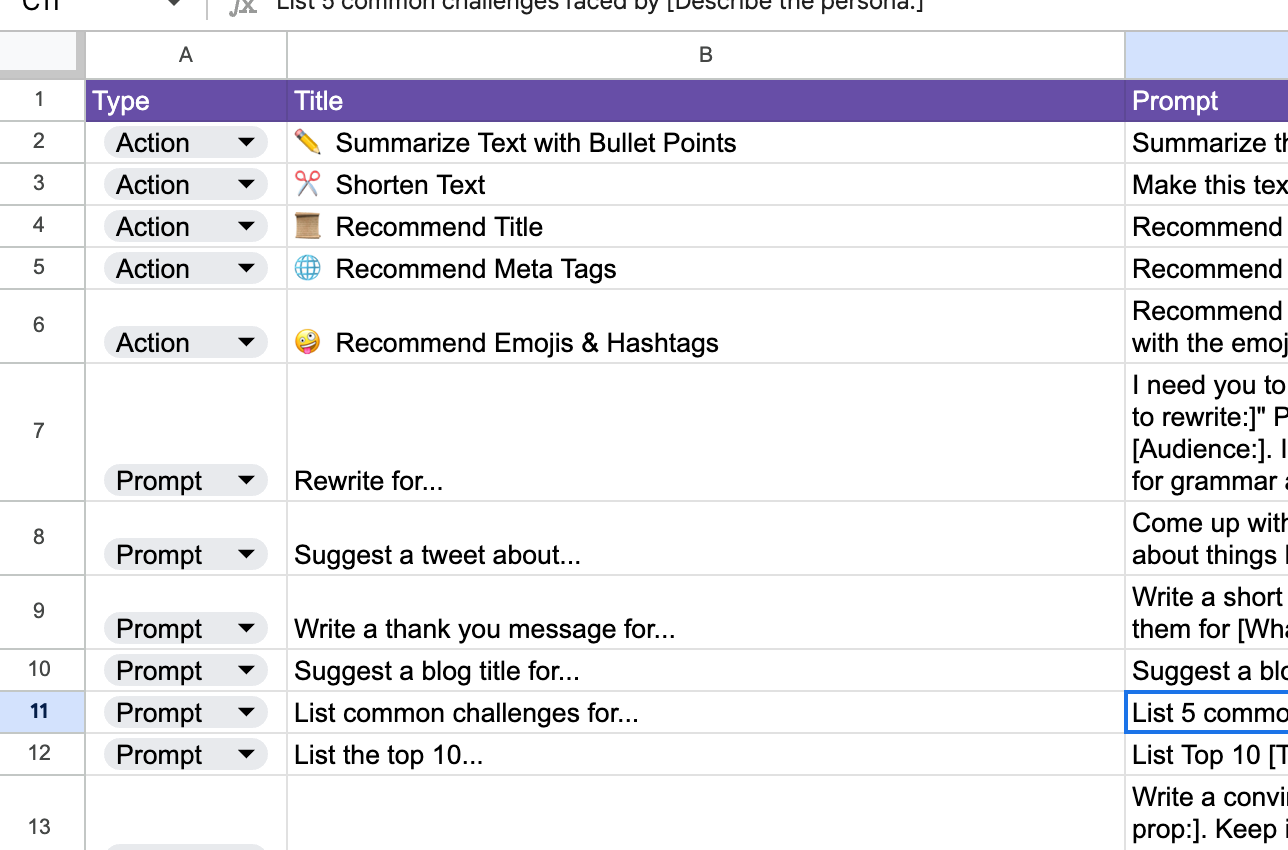

You'll notice there are two bricks like this: one fetches prompts (items that appear in the dropdown, such as preset prompts you want quick access to), and the other fetches actions (prompts that are applied to text you select on a page). The screenshot above shows how we distinguish between them in the Google Sheet.

Configure each Google Sheet brick to match your spreadsheet, tab name, column header, and the query that returns either prompts or actions, and you're all set!
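
To make the prompts-vs.-actions split concrete, here is a minimal TypeScript sketch of what the two Fetch bricks accomplish between them. It assumes a hypothetical sheet with `Type`, `Title`, and `Prompt` columns, where `Type` holds either `prompt` or `action`; your headers will likely differ. In the mod itself, the Google Sheet bricks do this filtering for you, so no code is required.

```typescript
// Hypothetical row shape: one object per sheet row, keyed by column header.
interface SheetRow {
  Type: string;   // "prompt" or "action"
  Title: string;  // label shown to the user
  Prompt: string; // text sent to the LLM
}

// Keeps only the rows whose Type column matches the requested value.
// Trimming and lowercasing is an extra safeguard added in this sketch.
function lookupRows(rows: SheetRow[], type: "prompt" | "action"): SheetRow[] {
  return rows.filter((row) => row.Type.trim().toLowerCase() === type);
}

// Sample sheet contents for illustration only.
const sheet: SheetRow[] = [
  { Type: "prompt", Title: "Summarize", Prompt: "Summarize this page in three bullet points." },
  { Type: "action", Title: "Translate", Prompt: "Translate the selected text into English." },
  { Type: "Prompt ", Title: "Brainstorm", Prompt: "List five ideas related to this page." },
];

const prompts = lookupRows(sheet, "prompt"); // items for the dropdown
const actions = lookupRows(sheet, "action"); // applied to selected text

console.log(prompts.map((row) => row.Title).join(", ")); // Summarize, Brainstorm
console.log(actions.map((row) => row.Title).join(", ")); // Translate
```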

You also might want to fetch prompts from other tools entirely, such as:

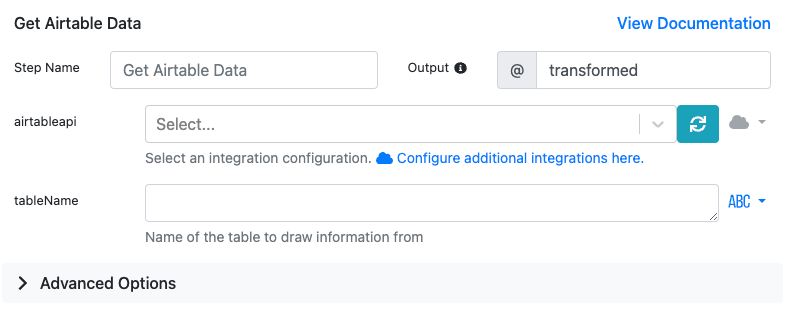

Connect to Airtable

First, add an Airtable integration configuration. Then use the Get Airtable Data brick to fetch the prompts you've defined in an Airtable database. Afterward, add a jq - JSON processor brick and use a jq filter to separate prompts from actions.
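
As a rough illustration of that jq step, the sketch below assumes the Get Airtable Data brick returns records shaped like the list-records response of Airtable's REST API (a `records` array where each record's column values sit under `fields`) and that your base has a hypothetical `Type` field. Under those assumptions, a jq filter such as `[.records[] | select(.fields.Type == "prompt") | .fields]` would keep only the prompts; here is the same logic in TypeScript:

```typescript
// Assumed shape, modeled on Airtable's REST API "list records" response.
interface AirtableRecord {
  id: string;
  createdTime: string;
  fields: Record<string, unknown>; // column values keyed by field name
}

interface AirtableResponse {
  records: AirtableRecord[];
}

// TypeScript equivalent of the jq filter:
//   [.records[] | select(.fields.Type == "prompt") | .fields]
// "Type" is a hypothetical field name; use whatever your base calls it.
function selectByType(data: AirtableResponse, type: string): Record<string, unknown>[] {
  return data.records
    .filter((record) => record.fields["Type"] === type)
    .map((record) => record.fields);
}

// Sample data for illustration only.
const airtableData: AirtableResponse = {
  records: [
    { id: "rec1", createdTime: "2024-01-01T00:00:00.000Z", fields: { Type: "prompt", Prompt: "Summarize this page." } },
    { id: "rec2", createdTime: "2024-01-01T00:00:00.000Z", fields: { Type: "action", Prompt: "Fix the grammar in the selected text." } },
  ],
};

const airtablePrompts = selectByType(airtableData, "prompt");
const airtableActions = selectByType(airtableData, "action");
console.log(airtablePrompts.length, airtableActions.length); // 1 1
```

Check the brick's actual output in the Page Editor before writing your filter, since the real shape may differ from this assumption.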

Connect a PixieBrix database

You can store your prompts in a PixieBrix database and use the List All Records brick to return all of them. PixieBrix databases have access control, so you can specify which team members should have access.

As with Airtable, you'll need to use the jq brick to filter the data by prompts vs. actions.

Connect to other tools

If you want to use another tool to fetch your prompts, you can search for an existing brick by clicking the + button under any brick in the Brick Actions Pipeline.

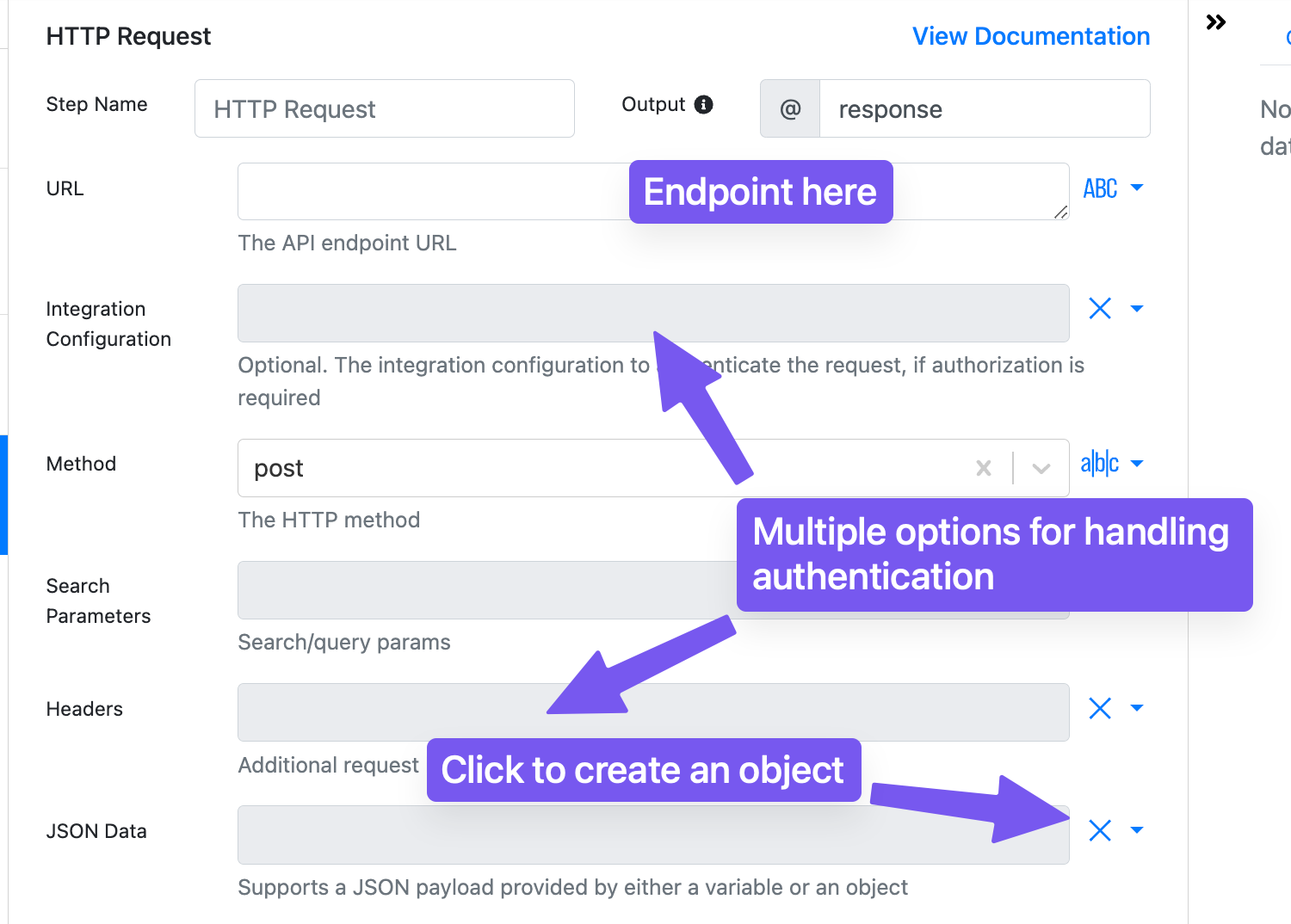

If the tool you're searching for isn't available as a brick, use the HTTP Request brick to fetch data from any API with PixieBrix.
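
If you go the HTTP Request route, you'll fill in roughly the pieces shown in this TypeScript sketch: a URL, a method, query parameters, and headers. The endpoint path, the `type` query parameter, bearer-token authentication, and the `buildPromptsRequest` helper are hypothetical stand-ins for a generic prompts API, and the field names only loosely mirror the brick's configuration panel; follow your tool's API documentation for the real values.

```typescript
// Loose stand-in for the fields you fill in on the HTTP Request brick.
interface HttpRequestConfig {
  url: string;
  method: "GET" | "POST";
  params: Record<string, string>;  // query string parameters
  headers: Record<string, string>;
}

// Hypothetical helper: builds a GET request for a hypothetical prompts API.
function buildPromptsRequest(baseUrl: string, type: "prompt" | "action", token: string): HttpRequestConfig {
  return {
    // Strip trailing slashes so the path joins cleanly.
    url: `${baseUrl.replace(/\/+$/, "")}/prompts`,
    method: "GET",
    params: { type },
    headers: {
      Authorization: `Bearer ${token}`,
      Accept: "application/json",
    },
  };
}

const promptsRequest = buildPromptsRequest("https://api.example.com/v1/", "prompt", "YOUR_API_TOKEN");
console.log(promptsRequest.url); // https://api.example.com/v1/prompts
```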

🤖 Customize the System Prompt

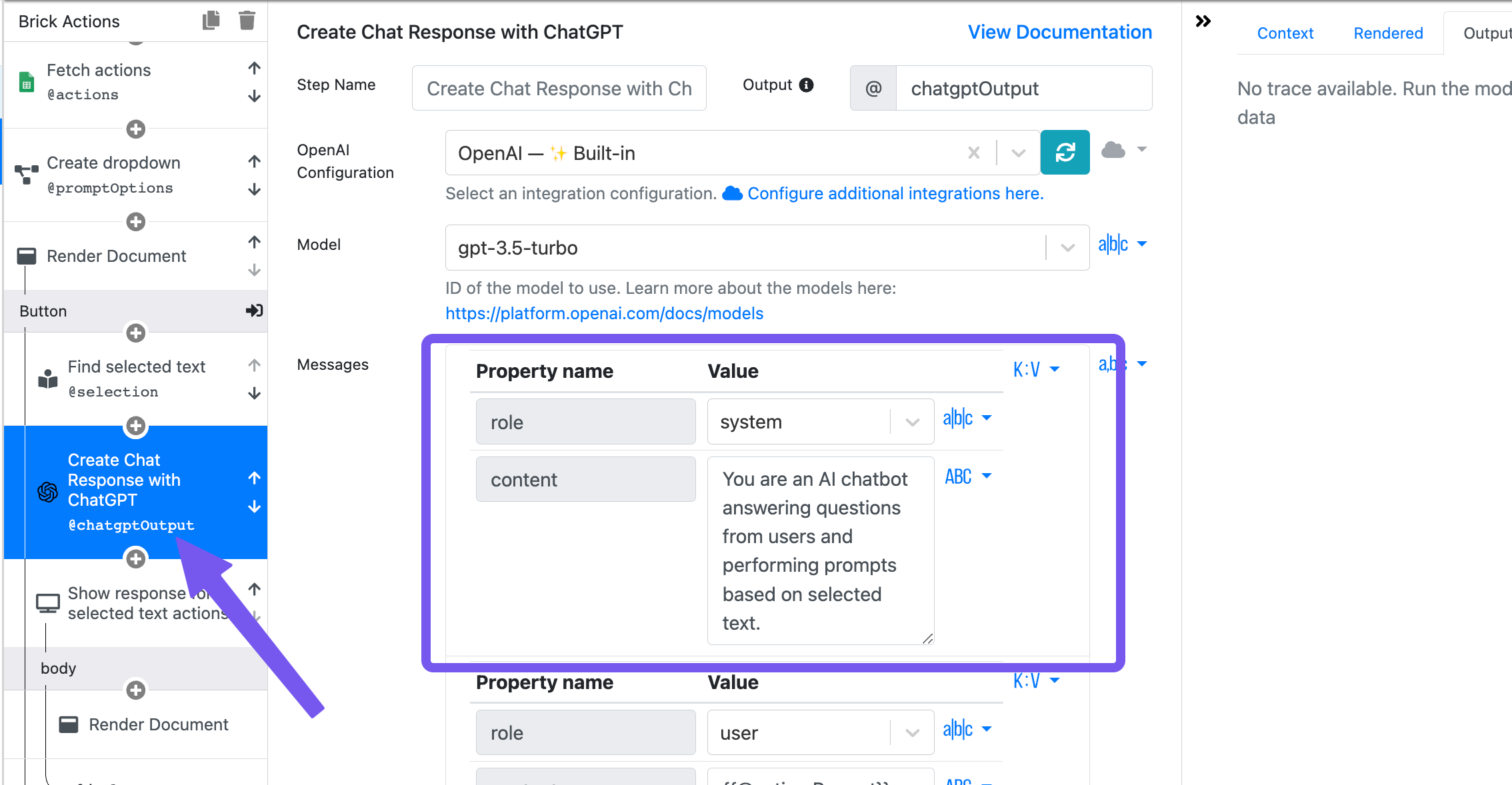

A system prompt is a set of predefined instructions that tells an LLM what to do with queries from users. In the mod template, AI Copilot has a very basic system prompt.

You can see the system prompt set in each Create Chat Response with ChatGPT brick.

Depending on how you plan to use your copilot, you can make the system prompt more specific by giving it more context and instructions for the task the LLM will complete.

For example, if you want to use an AI Copilot for categorizing text, you might adjust your system prompt to explain that it will receive text and needs to categorize it. You could provide the categories and a few examples of sample text and how it should be categorized.

To change the system prompt, simply replace the text inside the content value in the Messages field on any ChatGPT brick.
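
For the categorization example above, the Messages field ends up holding a system message followed by the user's text. The TypeScript sketch below shows that role/content structure as used by OpenAI's Chat Completions API; the categories, examples, and `buildMessages` helper are hypothetical, and in the brick you would type the system text straight into the `content` value rather than generate it with code.

```typescript
// One chat message in the role/content format used by OpenAI's Chat Completions API.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical categories and labeled examples for a categorization copilot.
const categories = ["Bug report", "Feature request", "Question"];

const systemPrompt = [
  "You will receive a piece of text. Assign it to exactly one of these categories:",
  ...categories.map((category) => `- ${category}`),
  "",
  "Examples:",
  'Text: "The export button crashes the app." Category: Bug report',
  'Text: "Could you add a dark mode?" Category: Feature request',
  'Text: "How do I reset my password?" Category: Question',
  "",
  "Reply with the category name only.",
].join("\n");

// Builds the Messages array: the system prompt first, then the text to categorize.
function buildMessages(textToCategorize: string): ChatMessage[] {
  return [
    { role: "system", content: systemPrompt },
    { role: "user", content: textToCategorize },
  ];
}

const messages = buildMessages("The page goes blank after I log in.");
console.log(JSON.stringify(messages, null, 2));
```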

Testing your prompts and ensuring they produce the desired output is always a good practice. If you get unexpected results, you may want to adjust your system prompt further. You can learn more about prompt engineering in our docs.

🔄 Use another LLM besides ChatGPT

In this mod template, we use ChatGPT, but there are other LLMs out there that you might want to use.

In this case, we recommend using our HTTP Request brick and reviewing the API documentation for your preferred LLM. To do this, remove the Create Chat Response with ChatGPT bricks and click the + button. Search for the HTTP Request brick, then fill it out accordingly.
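
As one example of filling out the HTTP Request brick, here is a TypeScript sketch of the request you might send to Anthropic's Messages API, chosen purely for illustration. The endpoint, headers, and body fields follow Anthropic's public API documentation, but the model name is only an example and `buildAnthropicRequest` is a hypothetical helper. The takeaway is that each provider shapes its request differently: Anthropic, for instance, takes the system prompt as a top-level `system` field rather than as a message.

```typescript
// Subset of the body fields for Anthropic's Messages API.
interface AnthropicMessagesBody {
  model: string;
  max_tokens: number;
  system: string; // Anthropic takes the system prompt here, not as a message
  messages: { role: "user" | "assistant"; content: string }[];
}

// Rough stand-in for what you'd enter on the HTTP Request brick.
interface LlmRequestConfig {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  data: AnthropicMessagesBody;
}

// Hypothetical helper; endpoint and headers follow Anthropic's API docs.
function buildAnthropicRequest(apiKey: string, systemPrompt: string, userText: string): LlmRequestConfig {
  return {
    url: "https://api.anthropic.com/v1/messages",
    method: "POST",
    headers: {
      "x-api-key": apiKey,
      "anthropic-version": "2023-06-01",
      "content-type": "application/json",
    },
    data: {
      model: "claude-3-5-sonnet-20240620", // example only; use a current model name
      max_tokens: 1024, // required by this API: upper bound on generated tokens
      system: systemPrompt,
      messages: [{ role: "user", content: userText }],
    },
  };
}

const llmRequest = buildAnthropicRequest(
  "YOUR_API_KEY",
  "You are a helpful assistant.",
  "Summarize the selected text.",
);
console.log(JSON.stringify(llmRequest.data, null, 2));
```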

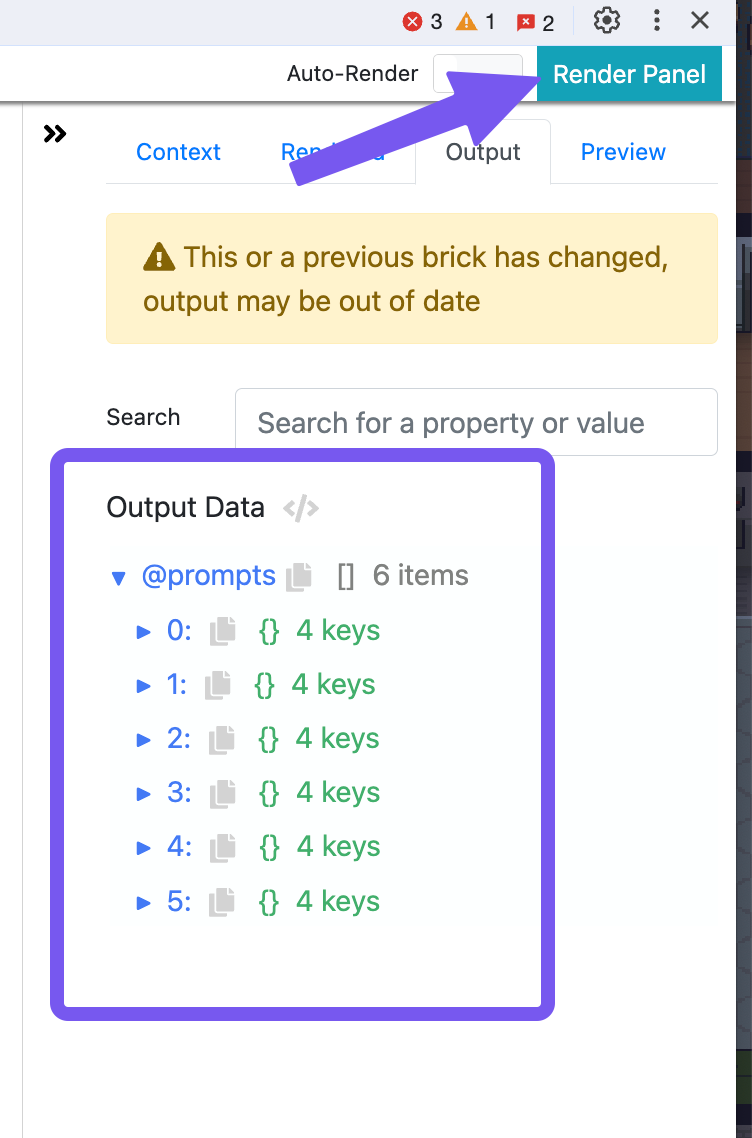

To test it out, click the Render Panel button in the top right corner of the Page Editor.

Check the output to confirm that you've received your expected response. If not, you may need to tweak the HTTP request.

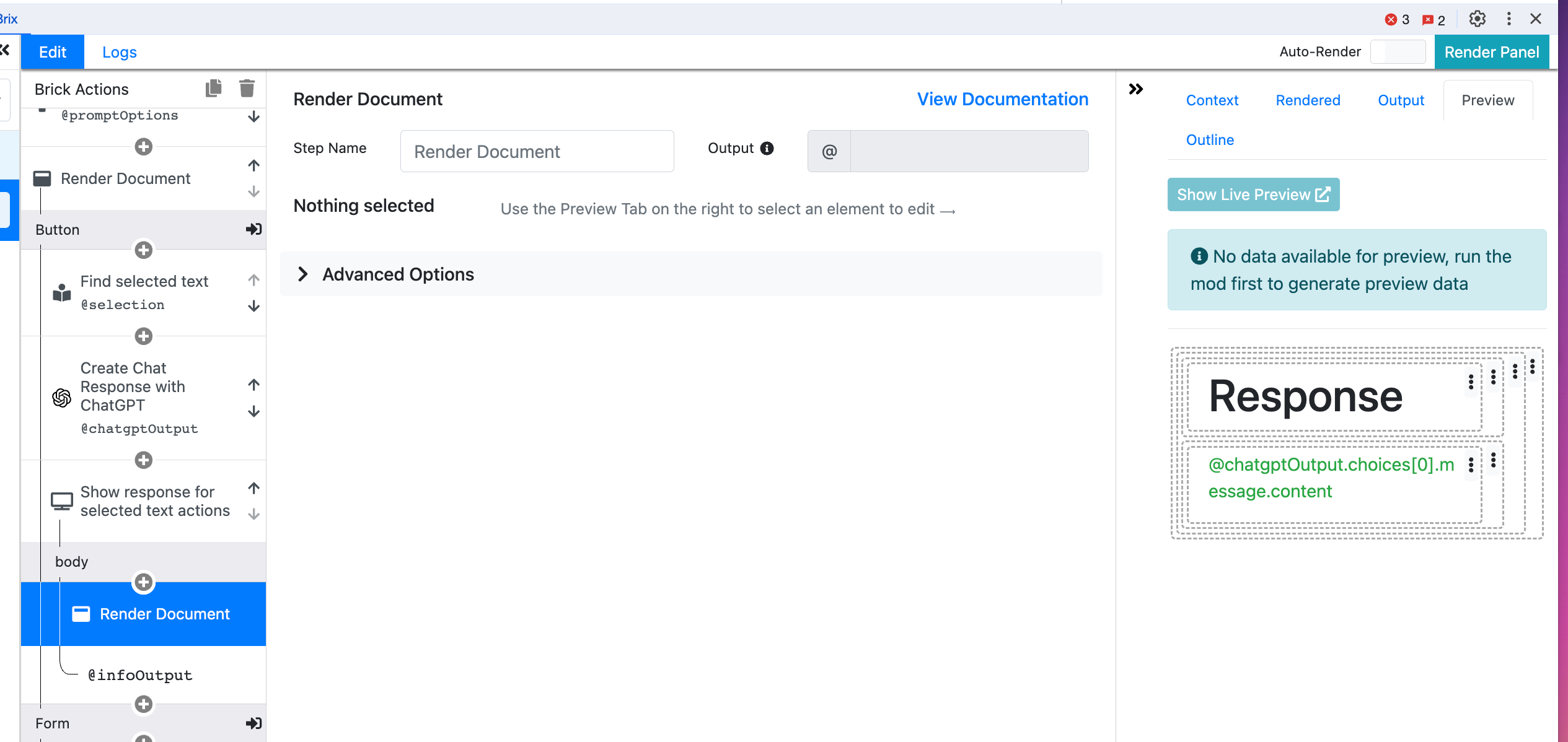

Once you've confirmed your response is what you expect, go to the bricks that display the AI response and update them to use the path from the HTTP Request brick's output instead of the ChatGPT brick's output.

You'll find these bricks nested inside Display Temporary Information bricks (renamed to Show response for... in this example). You'll know you're in the right place when you see Response and @chatgptOutput.choices[0].message.content in the Preview Panel.

Keep scrolling, because there are two more you'll need to update! Check inside every instance of Display Temporary Information (renamed to Show response for... in this mod template).
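
Those paths need updating because each API nests the generated text differently. This TypeScript sketch contrasts the template's `@chatgptOutput.choices[0].message.content` with the corresponding path for an Anthropic Messages API response (continuing the illustrative choice of provider). `@httpOutput` is a hypothetical name for your HTTP Request brick's output variable, and depending on how the brick exposes the response body, the exact path may differ, so copy the path you actually see in the output when you render the panel.

```typescript
// Abbreviated response shapes (only the fields used here).
// ChatGPT brick output: OpenAI Chat Completions format.
interface ChatGptOutput {
  choices: { message: { role: string; content: string } }[];
}

// Anthropic Messages API response.
interface AnthropicOutput {
  content: { type: string; text: string }[];
}

// Same lookup as the template's @chatgptOutput.choices[0].message.content
function chatGptText(chatgptOutput: ChatGptOutput): string {
  return chatgptOutput.choices[0].message.content;
}

// Corresponding lookup for an Anthropic response, i.e. a path like
// @httpOutput.content[0].text (@httpOutput is a hypothetical variable name).
function anthropicText(httpOutput: AnthropicOutput): string {
  return httpOutput.content[0].text;
}

// Sample responses for illustration only.
const sampleChatGpt: ChatGptOutput = {
  choices: [{ message: { role: "assistant", content: "Hello from ChatGPT" } }],
};
const sampleAnthropic: AnthropicOutput = {
  content: [{ type: "text", text: "Hello from Claude" }],
};

console.log(chatGptText(sampleChatGpt)); // Hello from ChatGPT
console.log(anthropicText(sampleAnthropic)); // Hello from Claude
```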

Run your mod again, select a prompt, and confirm that you can view the response in your sidebar.

➕ Increase the number of responses that are returned

Robots aren't perfect; sometimes they need a couple of tries to get something right. In the template's default configuration, your copilot returns only one response (called a completion) from ChatGPT, but you can set it to show more if you'd like.

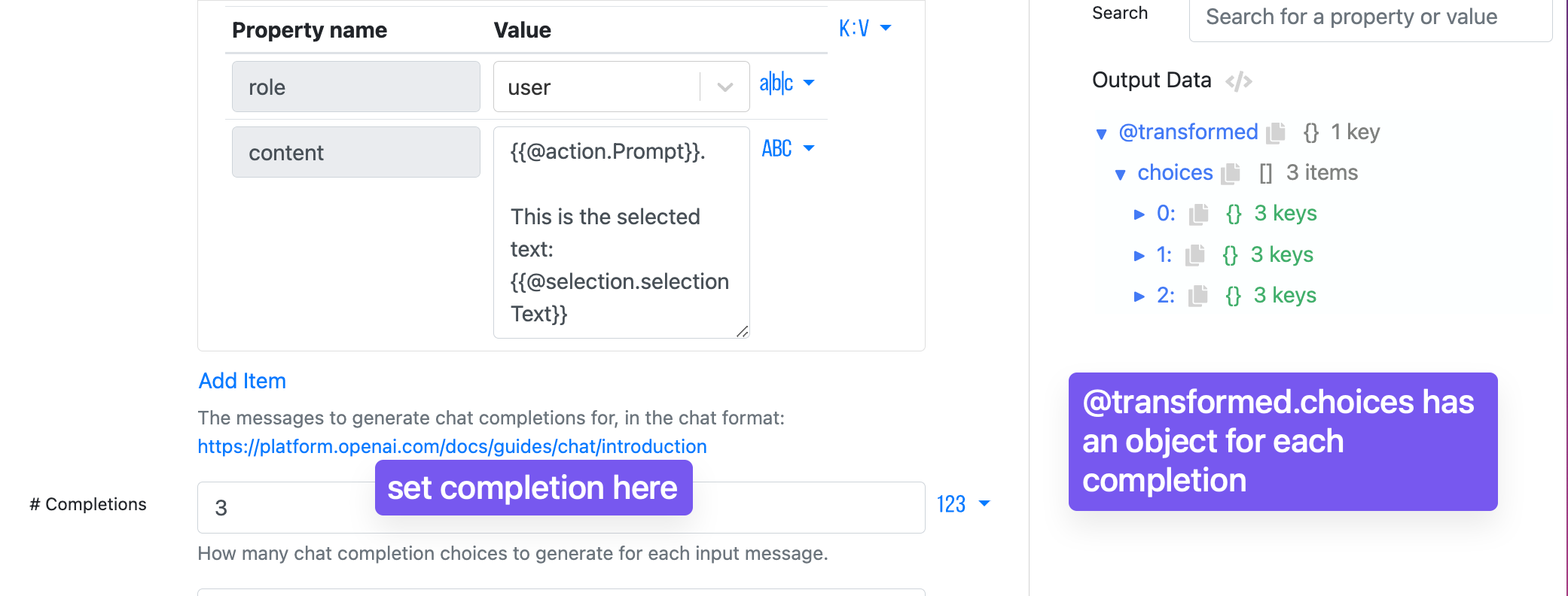

Step 1. Set the number of completions in the ChatGPT brick.

Go to the ChatGPT brick that you want to change (or all of them!) and adjust the # Completions field (below Messages) from 1 to whatever you'd like, such as 2 or 3.
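
The # Completions field presumably corresponds to the `n` parameter of OpenAI's Chat Completions API, which sets how many choices the model generates for a single request. The TypeScript sketch below shows that parameter on a request body; the `withCompletions` helper and the model name are illustrative assumptions, not the brick's internals.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Subset of an OpenAI Chat Completions request body.
interface ChatCompletionRequest {
  model: string;
  messages: ChatMessage[];
  n: number; // number of completions (choices) to generate
}

// Hypothetical helper: returns a copy of the request asking for n completions.
function withCompletions(request: ChatCompletionRequest, n: number): ChatCompletionRequest {
  if (!isFinite(n) || Math.floor(n) !== n || n < 1) {
    throw new RangeError("n must be a positive integer");
  }
  return { ...request, n };
}

const singleCompletion: ChatCompletionRequest = {
  model: "gpt-3.5-turbo",
  messages: [{ role: "user", content: "Suggest a title for this page." }],
  n: 1, // the template's default of one response
};

const threeCompletions = withCompletions(singleCompletion, 3);
console.log(threeCompletions.n); // 3
```

OpenAI bills for the tokens generated across all choices, so each extra completion adds cost; a small value such as 2 or 3 is usually enough.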

Step 2. Replace the text element with a List element in the Render Document brick.

Go to the Display Temporary Information brick below the ChatGPT brick. Click the Render Document brick, click the green text element in the Preview Panel, and finally, click the trash icon to delete it.

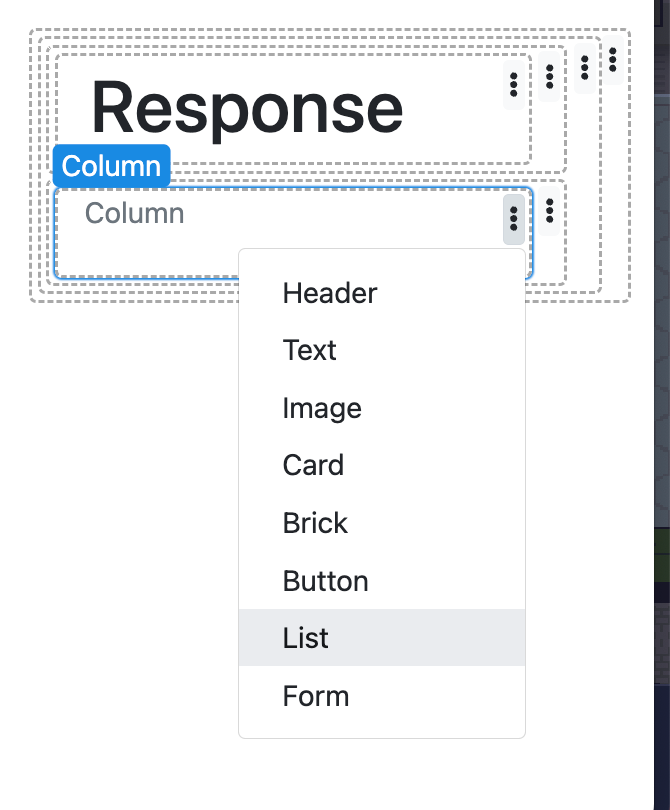

Next, click the three-dot menu in the existing Column element and select a List element.

Click the newly created List element and set the Array value to @chatgptOutput.choices, which is the array containing responses from each completion.

Click the text element that appears inside and set the content to @element.message.content.
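
The List element behaves much like a `map` over an array: it visits each item in `@chatgptOutput.choices`, binds it to `@element`, and renders `@element.message.content`. This TypeScript sketch shows the equivalent logic on a sample ChatGPT-style response with three completions; the sample data and the `renderCompletions` helper are hypothetical.

```typescript
// Subset of a ChatGPT (OpenAI Chat Completions) response with n > 1.
interface ChatGptChoice {
  index: number;
  message: { role: string; content: string };
}

interface ChatGptOutput {
  choices: ChatGptChoice[];
}

// Like the List element: visit each item of @chatgptOutput.choices as "element"
// and render @element.message.content, producing one text block per completion.
function renderCompletions(chatgptOutput: ChatGptOutput): string[] {
  return chatgptOutput.choices.map((element) => element.message.content);
}

// Sample response for illustration only.
const sampleOutput: ChatGptOutput = {
  choices: [
    { index: 0, message: { role: "assistant", content: "Draft A" } },
    { index: 1, message: { role: "assistant", content: "Draft B" } },
    { index: 2, message: { role: "assistant", content: "Draft C" } },
  ],
};

renderCompletions(sampleOutput).forEach((text, i) => {
  console.log(`Response ${i + 1}: ${text}`);
});
```

Because the List element iterates over whatever is in `choices`, the same Render Document setup works whether # Completions is 2, 3, or more.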

Re-render your panel; you'll now see one block of text per completion (for example, three if you set # Completions to 3) instead of just one!

Make sure to update the Render Document bricks inside the Display Temporary Information bricks for all the ChatGPT bricks you updated with multiple completions.


🎉 Congrats! You've successfully modified an AI Copilot Mod Template. But this is just the start. You can build anything else on top of this!